The Art of the AI Conversation: How We're Teaching Coders to Understand Us


Current AI assistants are powerful but brittle, often failing in subtle ways. The solution isn't just bigger models, but a deeper, more collaborative dialogue based on the principles of human communication.

Published: 2025-11-16

Summary

AI-assisted programming, or "vibe coding," promises to revolutionize software development by translating natural language into functional code. Yet, anyone who has used these tools knows the frustration of being subtly misunderstood. The AI might ignore a key constraint, misinterpret an ambiguous term, or silently go off track. The core issue is a communication breakdown. This article explores the concept of "mutual grounding," a theory from human communication, as a framework for building a true partnership between developers and AI. We examine three concrete techniques that move beyond simple prompting: having the AI explain its reasoning, having it ask clarifying questions, and letting users steer its attention. These methods don't just patch the flaws of current models; they point toward a future where human-AI collaboration is more transparent, reliable, and effective, ensuring that the human remains the architect of intent, not just a debugger of the AI's mistakes.

Key Takeaways

- The central challenge in AI-assisted programming is the communication gap between ambiguous human language and the precise requirements of code.

- This isn't a new problem; computer scientists like Edsger Dijkstra warned about the pitfalls of natural language programming decades ago.

- The concept of "mutual grounding," borrowed from human communication theory, offers a path forward by establishing a shared understanding between the user and the AI.

- Effective techniques include making the AI explain its generated code step-by-step, allowing for feedback on its reasoning, not just the final output.

- Enabling the AI to identify ambiguity and ask clarifying, multiple-choice questions can proactively prevent errors and reduce model hallucination.

- A novel method called "Selective Prompt Anchoring" gives users a way to steer the AI's attention, emphasizing critical parts of a prompt without complex re-phrasing.

- Even as AI models become more powerful, these interaction paradigms will remain crucial for validation and debugging, as 100% accuracy is an unlikely near-term outcome.

- The future of AI development tools lies in designing better collaborative systems, not just more powerful generative models.

The Frustration of Being Misunderstood by a Machine

There's a certain magic to modern AI-assisted programming. You describe a task in plain English—"count the number of uppercase vowels at even indices in a string"—and a fully formed block of code appears. This practice, sometimes called "vibe coding," feels like a leap into the future, a world where the tedious mechanics of syntax are handled by a machine, freeing us to focus on pure logic and creativity.

But the magic is brittle. Too often, the AI returns code that is plausible, runnable, and subtly wrong. In the example above, it might count all vowels, completely ignoring the "uppercase" constraint. You try again, rephrasing the prompt, adding emphasis. The AI apologizes and generates new code, which is also wrong, but in a different way. The conversation becomes a frustrating loop of clarification and correction, shifting the burden from writing code to debugging the AI's interpretation.
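To make the failure concrete, here is a minimal Python sketch of the task next to one plausible wrong answer. Both function names are hypothetical, and the buggy variant is only one illustration of how the "uppercase" constraint can be dropped; it is not output from any particular assistant.

```python
UPPERCASE_VOWELS = set("AEIOU")


def count_upper_vowels_even(s: str) -> int:
    """Intended behavior: count uppercase vowels at even indices (0, 2, 4, ...)."""
    return sum(
        1 for i, ch in enumerate(s) if i % 2 == 0 and ch in UPPERCASE_VOWELS
    )


def count_vowels_even_buggy(s: str) -> int:
    """Plausible but wrong: lowercases first, so the 'uppercase' constraint is lost."""
    return sum(
        1 for i, ch in enumerate(s) if i % 2 == 0 and ch.lower() in "aeiou"
    )


# The two versions agree on some inputs, which is what makes the bug subtle.
print(count_upper_vowels_even("AEIOU"), count_vowels_even_buggy("AEIOU"))
# They diverge as soon as a lowercase vowel sits at an even index.
print(count_upper_vowels_even("aBCdEf"), count_vowels_even_buggy("aBCdEf"))
```

Because both functions run without error and agree on all-uppercase input, a quick spot check can easily miss the difference.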

This isn't a failure of the AI's power, but of its ability to communicate. The core challenge of AI-assisted programming is not just about generating code; it's about establishing a shared understanding between a human mind and a silicon one. The solution, it turns out, may lie in making the interaction less like giving orders to a tool and more like having a conversation with a collaborator.

The core challenge is translating ambiguous human intent into precise, formal instructions a machine can execute.

An Old Debate in a New Era

The dream of programming in natural language is nearly as old as programming itself. In the early 1970s, the LUNAR system allowed geologists to query data on moon rocks using plain English, translating their questions into formal database queries. But the idea has always had its skeptics.

Famed computer scientist Edsger Dijkstra, a fierce advocate for mathematical rigor in programming, called natural language programming a "foolish idea." He argued that a fundamental tension exists between the inherent ambiguity of human language and the absolute precision required by a formal programming language. For Dijkstra, the goal wasn't to accommodate the messiness of natural language, but to create better, more expressive formal languages that elevate human thought to be more precise.

For half a century, Dijkstra's view largely won out. The industry progressed by creating higher-level languages like Python and Java, not by trying to make computers understand English. But the rise of large language models (LLMs) has forcefully reopened the debate. For the first time, we have systems that are remarkably adept at navigating the ambiguity of language. Yet, as Dijkstra predicted, the core problem of ambiguity hasn't vanished—it has simply become a probabilistic error rate.

Two fundamental challenges persist:

- Inherent Ambiguity: A single natural language instruction can map to many different valid programs.

- Infinite Space: The universe of possible programs is vast and complex, making it difficult for an AI to search for the correct one without precise guidance.

From Monologue to Dialogue: The Power of Mutual Grounding

Today's interactions with coding assistants are often a one-way street. The human issues a command, and the AI responds. If the response is wrong, the human issues a new, more detailed command. This linear monologue places the entire burden of clarity on the user.

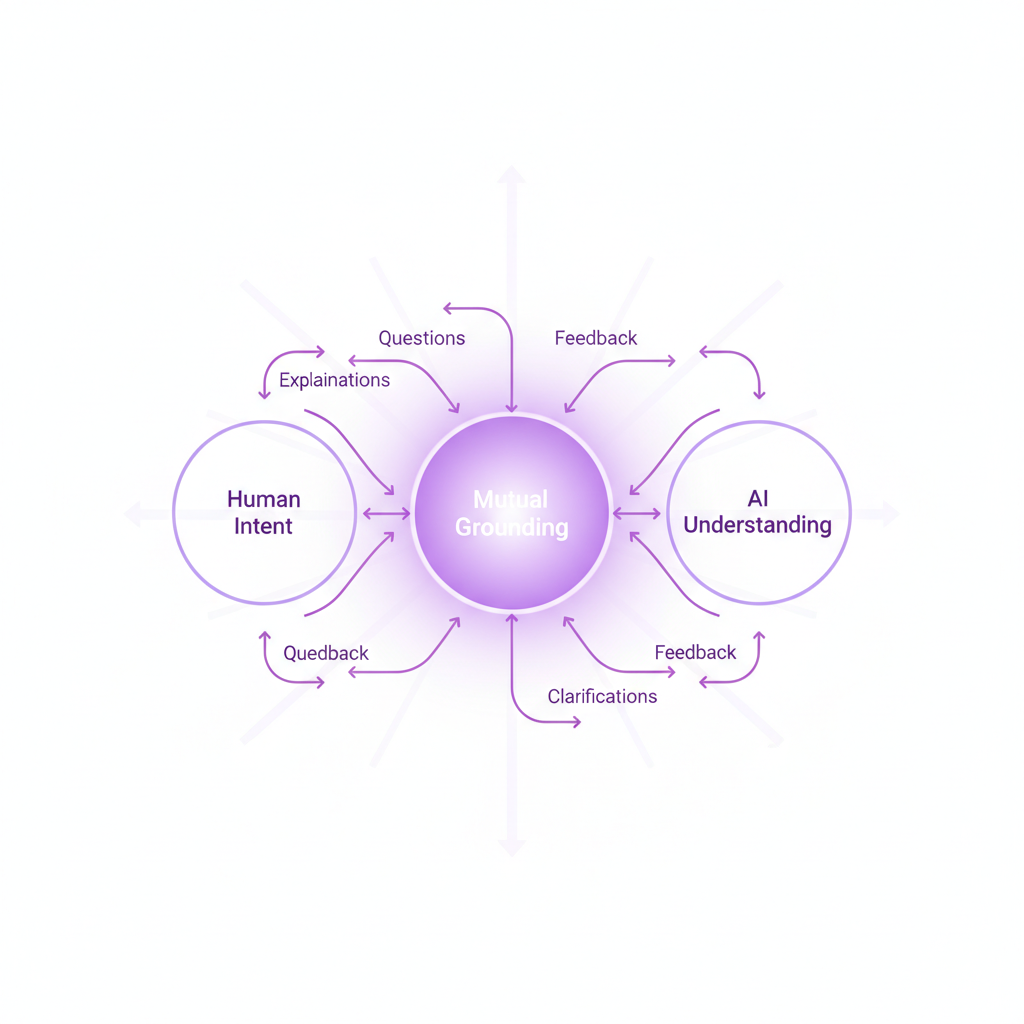

Human collaboration works differently. When people work together on a complex task, they engage in a dynamic process of establishing a shared understanding. Psychologists Herbert H. Clark and Susan E. Brennan call this "grounding." We confirm what we've heard ("So, you want me to focus on the uppercase vowels?"), we ask for clarification when confused ("When you say 'correlation,' which statistical method should I use?"), and we use emphasis to signal importance. This back-and-forth is how we build a reliable, shared mental model of the task.

Inspired by this theory, researchers are developing new interaction paradigms to establish mutual grounding between programmers and LLMs. The goal is twofold: align the AI with the human's intent, and help the human understand the AI's "mental state"—why it gets stuck, what it misunderstands, and how to provide the most effective feedback.

Inspired by human communication, mutual grounding aims to create a shared mental model through iterative dialogue.

Three Techniques for a Better AI Conversation

Moving from a simple prompt-response cycle to a grounded conversation requires new tools. Here are three promising techniques that are reshaping human-AI collaboration in programming.

Technique 1: Making the AI Explain Itself

An old saying advises, "actions speak louder than words." In the context of an LLM, the code it generates is a direct reflection of its interpretation of your request. The problem is that for a novice programmer, or even an expert reviewing unfamiliar code, the logic behind those actions isn't always clear.

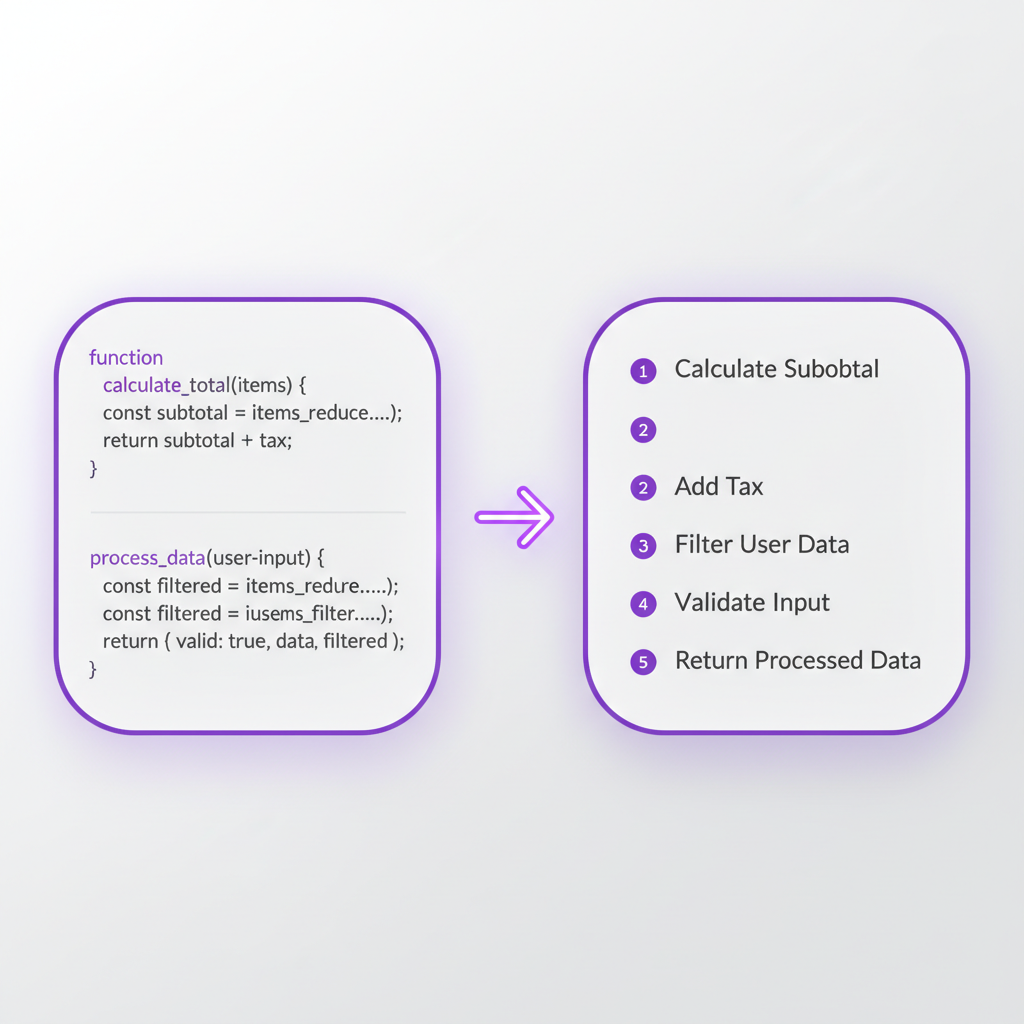

A powerful grounding technique is to have the AI generate the code and then immediately explain its own work back to the user in a step-by-step natural language summary.

For example, if a user asks for a complex SQL query, the system would not only produce the query but also a breakdown:

- First, I will select the 'students' table.

- Then, I will filter for students where the 'enrollment_year' is 2024.

- Next, I will join this with the 'courses' table on the 'student_id' column.

- Finally, I will group the results by 'major' and count the number of students.

This explanation serves as a scaffold for feedback. A user can immediately spot a misunderstanding in the reasoning—"Ah, you should have filtered for 2025, not 2024"—without needing to parse the complexities of SQL syntax. They can even edit the natural language step, and the system can use that precise feedback to correct only the relevant part of the code.
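One way to picture this pairing of explanation and code is the rough Python sketch below. It is an illustration only, not the researchers' implementation: the `Step` class, the `build_query` and `revise_step` helpers, the table schema, and the sample rows are all assumptions. The steps appear in SQL clause order rather than the order listed above, and the revised SQL fragment is written by hand where a real system would ask the model to regenerate it.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Step:
    explanation: str  # natural-language step shown to the user
    sql: str          # the SQL fragment that this step stands for


def build_query(steps):
    """Assemble the full query from the per-step fragments."""
    return " ".join(step.sql for step in steps)


def revise_step(steps, index, explanation, sql):
    """Return a copy of the steps with exactly one step replaced."""
    revised = list(steps)
    revised[index] = Step(explanation, sql)
    return revised


steps = [
    Step("Select the 'students' table and count students per major.",
         "SELECT s.major, COUNT(DISTINCT s.student_id) AS n FROM students AS s"),
    Step("Join with the 'courses' table on the 'student_id' column.",
         "JOIN courses AS c ON c.student_id = s.student_id"),
    Step("Filter for students where the 'enrollment_year' is 2024.",
         "WHERE s.enrollment_year = 2024"),
    Step("Group the results by 'major'.",
         "GROUP BY s.major"),
]

# Hypothetical toy data, only so the sketch can be executed end to end.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE students (student_id INTEGER, major TEXT, enrollment_year INTEGER);
CREATE TABLE courses (course_id INTEGER, student_id INTEGER);
INSERT INTO students VALUES (1, 'Math', 2024), (2, 'Math', 2025), (3, 'Physics', 2025);
INSERT INTO courses VALUES (10, 1), (11, 2), (12, 3), (13, 3);
""")

rows_2024 = conn.execute(build_query(steps)).fetchall()

# The user corrects the explanation ("2025, not 2024"); only that step changes.
fixed = revise_step(
    steps, 2,
    "Filter for students where the 'enrollment_year' is 2025.",
    "WHERE s.enrollment_year = 2025",
)
rows_2025 = sorted(conn.execute(build_query(fixed)).fetchall())
print(rows_2024, rows_2025)
```

Keeping each explanation attached to its own fragment is what lets a correction touch one clause while the rest of the query stays as it was.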

Studies have shown this approach not only improves task success rates but also narrows the performance gap between expert and novice users, as it translates the AI's logic into a universally understandable format.

By explaining its logic, the AI allows users to debug its reasoning, not just its syntax.

Technique 2: Teaching the AI to Ask for Help

Instead of guessing and generating a potentially wrong answer, a good collaborator knows when to ask for more information. Researchers have been working on enabling LLMs to do the same.

Simply prompting an AI, "Is my request ambiguous?" is ineffective. A better approach is a multi-step process where the AI first attempts to interpret the user's intent and then, if it detects ambiguity, generates a clarification question.

Crucially, these are not open-ended questions. Following the design principle of "recognition over recall," the system presents the user with a multiple-choice question based on the most likely interpretations.

Imagine a data analyst using a tool called Dango to clean a dataset. They type, "Measure the correlation between user engagement and in-app purchases." The term "correlation" is ambiguous. Instead of making an assumption, the AI asks:

It seems you want to measure correlation. Which method should I use?

- A) Pearson correlation (for linear relationships)

- B) Spearman correlation (for monotonic relationships)

- C) Run a t-test (to compare means)

- D) Other (please specify)

This simple interaction proactively resolves ambiguity, saving the user from having to correct a faulty assumption later. A user study found that this clarification mechanism significantly reduced model hallucinations and helped users complete tasks more quickly and accurately.
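The sketch below shows, in Python, how a multiple-choice clarification might be represented and why the answer matters. It is an illustration under stated assumptions, not Dango's actual design: the `Option` and `ClarificationQuestion` classes are hypothetical, only the first two answer options are modeled, the correlation functions are hand-rolled to keep the example dependency-free, and the sample data is invented.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: strength of a linear relationship."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def ranks(values: Sequence[float]) -> list:
    """Rank values 1..n (assumes no ties, to keep the sketch short)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = float(rank)
    return result


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


@dataclass
class Option:
    label: str
    description: str
    run: Callable[[Sequence[float], Sequence[float]], float]


@dataclass
class ClarificationQuestion:
    prompt: str
    options: list

    def render(self) -> str:
        """Format the question as lettered choices (recognition over recall)."""
        lines = [self.prompt]
        for letter, opt in zip("ABCDEFGH", self.options):
            lines.append(f"{letter}) {opt.label} ({opt.description})")
        return "\n".join(lines)

    def resolve(self, letter: str) -> Option:
        """Map the user's letter back to the chosen interpretation."""
        return self.options["ABCDEFGH".index(letter.upper())]


question = ClarificationQuestion(
    "It seems you want to measure correlation. Which method should I use?",
    [
        Option("Pearson correlation", "for linear relationships", pearson),
        Option("Spearman correlation", "for monotonic relationships", spearman),
    ],
)

# Invented data: purchases grow monotonically but not linearly with engagement.
engagement = [1, 2, 3, 4, 5]
purchases = [1, 4, 9, 16, 25]

print(question.render())
for letter in "AB":
    choice = question.resolve(letter)
    print(choice.label, round(choice.run(engagement, purchases), 3))
```

On this data the two interpretations give different numbers, which is the practical reason to ask before computing rather than silently picking one.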

Technique 3: Giving the User an 'Attention Dial'

Sometimes, the AI understands all the words in a prompt but fails to assign the correct importance to them. It might latch onto a familiar pattern and ignore a critical, but subtle, constraint. To solve this, researchers developed a method called Selective Prompt Anchoring (SPAR), which gives the user a way to steer the model's attention.

This technique allows a user to highlight a specific word or phrase in their prompt—like "uppercase"—to tell the model, "This part is especially important. Pay more attention to it."

Selective Prompt Anchoring allows users to tell the AI which parts of an instruction are most critical.

The implementation is clever. Directly manipulating the thousands of attention heads inside a massive transformer model is computationally expensive and complex. Instead, SPAR works on the model's final output scores (known as logits), the raw values that are later normalized into probabilities. It runs the generation process twice in parallel: once with the full prompt and once with the anchored word masked out. The difference between the logits of the two runs measures the influence of the anchored word. The system then amplifies that influence, effectively "turning up the dial" on that specific concept.
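The arithmetic behind this idea can be sketched in a few lines of Python. This is a toy illustration, not the published method: the update rule `full + strength * (full - masked)`, the `strength` parameter, the three-token vocabulary, and the logit values are all assumptions chosen to show the effect, and no real model is involved.

```python
import math


def softmax(logits):
    """Turn raw logits into probabilities (numerically stable form)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def anchor_logits(full, masked, strength):
    """Amplify the anchored text's influence on the next-token logits.

    full     -- logits from the run with the complete prompt
    masked   -- logits from the run with the anchored text masked out
    strength -- hypothetical dial; 0.0 leaves the original logits unchanged
    """
    return [f + strength * (f - m) for f, m in zip(full, masked)]


def pick(vocab, logits):
    """Greedy choice: the token with the highest logit."""
    return vocab[max(range(len(logits)), key=lambda i: logits[i])]


# Made-up next-token candidates and scores for the "uppercase vowels" prompt.
vocab = ["isupper", "lower", "in"]
full_logits = [1.0, 1.4, 0.2]    # "uppercase" present in the prompt
masked_logits = [0.1, 1.5, 0.2]  # "uppercase" masked out

anchored = anchor_logits(full_logits, masked_logits, strength=1.0)

print(pick(vocab, full_logits), softmax(full_logits))
print(pick(vocab, anchored), softmax(anchored))
```

In this toy case the unanchored run prefers `lower`, while the anchored logits favor `isupper`, because that token's score is the one that changes most when "uppercase" is removed from the prompt.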

This method has been shown to consistently improve the performance of various code-generation models on difficult benchmarks. It's a low-overhead way to inject human intuition about what matters most, guiding the model to the correct interpretation without lengthy re-prompting.

Why Better Conversations Matter, Even When AIs Get Smarter

A common question is whether these interaction techniques are just temporary fixes for today's flawed models. In five or ten years, won't foundation models be so powerful that they simply "get it right" the first time?

This is unlikely. A better analogy for an LLM is not a calculator but a brilliant, occasionally erratic junior collaborator. Contrast this with a compiler, a tool we trust almost implicitly because its translation of high-level code to machine code is deterministic and, in practice, overwhelmingly reliable. We rarely double-check its output. LLMs, by their probabilistic nature, are not 100% reliable and may never be. They will always have failure modes, some of them unpredictable.

Because of this, the human will always need to be in the loop for verification, validation, and debugging. The critical question is not if we will need to interact with AIs, but how. The goal of mutual grounding is to make that interaction as efficient and painless as possible.

We are moving beyond the era of "prompt engineering" as a dark art of finding the magic words to coax a model into compliance. The future lies in designing transparent, controllable, and collaborative systems. By building tools that can explain themselves, ask for help, and listen to our emphasis, we can transform AI from a powerful but unpredictable tool into a true creative partner.

Citations

- The LUNAR questioning system - BBN Report No. 2362, Bolt Beranek and Newman Inc. (whitepaper, 1972-06-15) https://dl.acm.org/doi/10.5555/1624775.1624791

- Provides historical context for the LUNAR system, one of the earliest natural language programming interfaces, as mentioned in the talk.

- On the foolishness of 'natural language programming' - E.W. Dijkstra Archive (documentation, 1978-09-12) https://www.cs.utexas.edu/users/EWD/transcriptions/EWD06xx/EWD667.html

- The primary source for Dijkstra's famous critique of natural language programming, establishing the historical counterargument.

- Grounding in communication - Shared minds: The new technologies of collaboration (book, 1991-01-01) https://psycnet.apa.org/record/1992-18330-001

- The seminal paper by Clark and Brennan that introduced the theory of grounding in communication, which is the theoretical foundation for the speaker's work.

- NL2Code: A Human-in-the-Loop Framework for Natural Language to Code Generation - arXiv (journal, 2023-05-04) https://arxiv.org/abs/2305.02752

- This paper from the speaker's research group details the method of generating step-by-step explanations for code, which corresponds to the first technique discussed.

- Dango: A Human-in-the-Loop Framework for Data Wrangling with Large Language Models - arXiv (journal, 2023-10-03) https://arxiv.org/abs/2310.01973

- This paper introduces the Dango system and the clarification question mechanism, corresponding to the second technique. It also contains the user study results mentioned.

- Selective Prompt Anchoring for Controllable Text Generation - arXiv (journal, 2024-05-23) https://arxiv.org/abs/2405.14753

- This paper details the SPAR method for attention steering via logit manipulation, which is the third technique discussed. It provides the technical background and evaluation results.

- AI Alignment: Ensuring AI Behaves as Intended - QualZ (org, 2024-05-20) https://qualz.ai/ai-alignment-ensuring-ai-behaves-as-intended/

- Provides broader context on the problem of aligning AI behavior with human intent, which is the overarching goal of the mutual grounding techniques discussed.

- AI-assisted programming: The new programming workflow - PNAS (journal, 2024-05-06) https://www.pnas.org/doi/10.1073/pnas.2310703121

- A recent perspective piece on how AI is changing programming workflows, providing contemporary context for the importance of human-AI interaction research.

- How to talk to your AI - MIT Technology Review (news, 2024-02-21) https://www.technologyreview.com/2024/02/21/1088204/how-to-talk-to-your-ai/

- Discusses the evolution of interacting with AI, moving beyond simple prompts to more sophisticated conversational techniques, aligning with the article's central theme.

- Mutual Grounding: A Foundation for Human-AI Collaboration - Stanford University Human-Computer Interaction Seminar (video, 2024-10-25)

- The original source presentation from which the article's core ideas are derived.

Appendices

Glossary

- Mutual Grounding: A concept from communication theory where participants in a dialogue establish a shared understanding. In the context of AI, it refers to the process of aligning the AI's interpretation of a task with the human user's actual intent through interactive communication.

- Logits: In a neural network, logits are the raw, un-normalized scores that are output by the final layer. These scores are then typically passed through a function (like softmax) to be converted into probabilities, which determine the likelihood of each possible next word or token.

- Vibe Coding: A colloquial term for programming by giving high-level, natural language instructions to an AI coding assistant, relying on the AI to interpret the user's intent or 'vibe' and generate the corresponding code.

Contrarian Views

- The 'Scaling Hypothesis' suggests that many of the current flaws in LLMs, including misinterpreting instructions, are emergent properties of their current scale. Proponents argue that with vastly larger models and more data, these communication issues will resolve themselves without needing specialized interaction paradigms.

- Some argue that investing in better formal languages and verification tools, in the tradition of Dijkstra, is a more robust long-term solution than trying to accommodate the inherent ambiguity of natural language.

- Over-reliance on these conversational tools for coding could hinder the development of fundamental programming skills in novices, creating a generation of developers who can prompt an AI but cannot read, write, or debug complex code from first principles.

Limitations

- The techniques described are primarily focused on task-oriented, single-turn or short-session programming problems. They may be less effective for large-scale, long-term software engineering projects that involve complex architectural decisions and evolving requirements.

- The effectiveness of these methods still depends on the underlying capability of the foundation model. A model with poor reasoning abilities will still produce poor results, even with better interaction design.

- There is a risk of increased cognitive load on the user if the conversational interactions become too frequent or complex, potentially negating the time saved by using the AI in the first place.

Further Reading

- A Survey of Large Language Models for Code: Evolution, Benchmarking, and Future Trends - https://arxiv.org/abs/2401.04234

- What Is It Like to Program with a Code-Generating AI? - https://cacm.acm.org/magazines/2024/3/279829-what-is-it-like-to-program-with-a-code-generating-ai/fulltext
