Beyond the Benchmark: Reframing Responsible AI with Rigor and Human Agency

The race for AI performance often overlooks two critical components: a robust, multi-faceted definition of rigor and a clear purpose. Responsible AI isn't a constraint—it's the path to better science and more human-centric technology.

Published: 2025-11-15

Summary

In the relentless pursuit of scaling AI models and topping benchmark leaderboards, the AI community often operates with a narrow definition of success. This article argues for a fundamental reframing of "Responsible AI," not as a separate field of ethics or compliance, but as an integral component of scientific and engineering excellence. This reframing rests on two pillars. First, it proposes a broader, six-faceted conception of rigor that moves beyond mere methodological correctness to include the epistemic foundations, normative values, conceptual clarity, reporting practices, and interpretative honesty of AI work. Second, it posits human agency (the capacity for individuals to make meaningful choices and shape their own lives) as the foundational principle that gives responsible AI its purpose. By centering human agency, we move from asking if a system works to asking who it works for and how it empowers them. This dual lens of expanded rigor and human agency provides a powerful framework for building AI that is not only more capable, but also more trustworthy, transparent, and aligned with human values.

Key Takeaways

- Responsible AI is not just about preventing harm; it's about demanding greater rigor in how AI is built and validated.

- Rigor in AI is often narrowly defined as methodological correctness. A broader view must include epistemic, normative, conceptual, reporting, and interpretative rigor.

- Epistemic rigor questions the foundational knowledge and assumptions behind an AI task, preventing the resurrection of debunked pseudoscience.

- Conceptual rigor demands clarity about what is being measured and how it is named (e.g., what "hallucination" actually refers to), and it avoids misleading anthropomorphic terms.

- Human agency—supporting people's ability to make choices and control their environment—should be the core 'why' behind responsible AI principles like transparency and fairness.

- Principles like transparency are not ends in themselves, but means to empower users with the information needed for better decision-making.

- Anthropomorphic AI, which mimics human behavior, poses a direct threat to human agency by creating risks of emotional dependence, misrepresentation, and loss of control.

- Adopting this broader view of rigor and centering human agency can improve the quality, reliability, and ultimate value of AI systems.

- Many failures attributed to a lack of 'responsibility' are fundamentally failures of scientific and engineering rigor.

Article

The world of artificial intelligence is defined by a relentless drive for progress, measured in scaling laws, parameter counts, and leaderboard positions. In this race to the top, "rigor" has become synonymous with a narrow set of technical virtues: mathematical correctness, computational scale, and superior performance on standardized benchmarks. But this impoverished view of rigor is failing us. It creates a false dichotomy between building “good AI” (meaning powerful) and “responsible AI” (meaning safe or ethical).

What if responsible AI isn't a separate, optional layer of compliance, but the very essence of high-quality, rigorous work? This perspective reframes the entire conversation. It suggests that many of the harms and missteps we attribute to a lack of ethics are, at their core, failures of rigor. By expanding our definition of rigor and grounding our work in the foundational principle of human agency, we can move beyond simply preventing harm and start building AI that is genuinely trustworthy, useful, and empowering.

Responsible AI is not a separate discipline, but an integrated part of rigorous, human-centric engineering.

The Rigor We Have vs. The Rigor We Need

In AI research, rigor is often confined to methodological concerns. [1, 2, 3] Did you apply the math correctly? Does your model outperform others on a complex benchmark? Can it scale? These are important questions, but they represent only one piece of a much larger puzzle.

Limiting our focus to methodology obscures the vast web of choices that precede and follow the application of any algorithm. These choices—about which problems to solve, what knowledge to build upon, which values to prioritize, and how to interpret the results—have a profound impact on the quality and integrity of the final product. A broader, more holistic conception of rigor is needed, one that illuminates these choices and holds them to a higher standard.

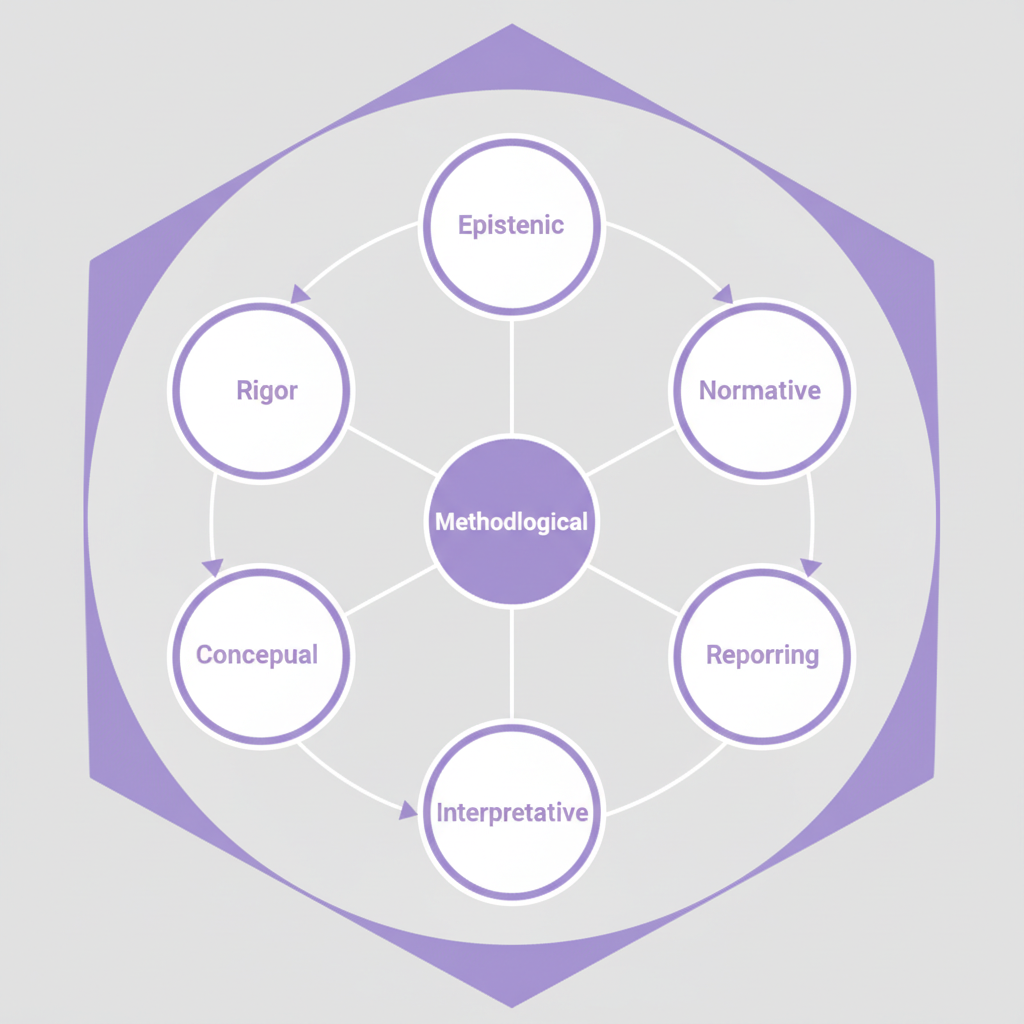

A Six-Faceted Framework for Rigor in AI

Work in the responsible AI community implicitly calls for a more expansive view of rigor. We can organize this into six distinct, yet interconnected, facets. [9, 16] Each provides a lens for interrogating the choices we make when building AI systems.

True rigor in AI extends far beyond correct methodology, encompassing the entire research and development lifecycle.

Epistemic Rigor: Are We Building on Solid Ground?

Epistemic rigor concerns the background knowledge that informs our work. It asks: What assumptions are we making? Is the knowledge we're drawing upon well-justified, or are we inadvertently building on pseudoscience?

A stark example of epistemic failure is the recurring attempt to build AI systems that predict criminality from facial features. [5, 11, 12] This work assumes that a person's inner character or future actions can be inferred from their outward appearance. This is the central premise of physiognomy, a pseudoscience that has been extensively debunked and used historically to justify racial discrimination. [5, 20]

Despite this, pockets of AI research continue to revive these ideas, cloaking them in the language of machine learning. As critics like researcher Deborah Raji have pointed out, the underlying task is often conceptually flawed, and the data used to train such systems is sourced from a criminal justice system with well-documented biases. [31, 37, 41] An epistemically rigorous approach would scrutinize these foundational assumptions and recognize that no amount of methodological sophistication can validate a scientifically baseless premise.

Normative Rigor: What Values Are We Embedding?

Normative rigor forces us to make explicit the disciplinary norms, values, and beliefs that shape our research. AI development is not a value-neutral activity; it is guided by expectations about what matters—efficiency, scale, novelty, or human well-being.

Consider the emerging trend of using AI-generated personas to simulate human participants in user research. [6, 14, 23] This practice reflects common AI community values like scalability and efficiency. However, it may directly conflict with the foundational values of the human-computer interaction (HCI) research it claims to support—values like authentic representation, inclusion, and direct participation. [25, 26] No amount of model improvement can resolve this fundamental conflict of values. Normative rigor asks us to identify these embedded values and debate whether they are appropriate for the problem at hand.

Conceptual Rigor: Are We Measuring What We Think We're Measuring?

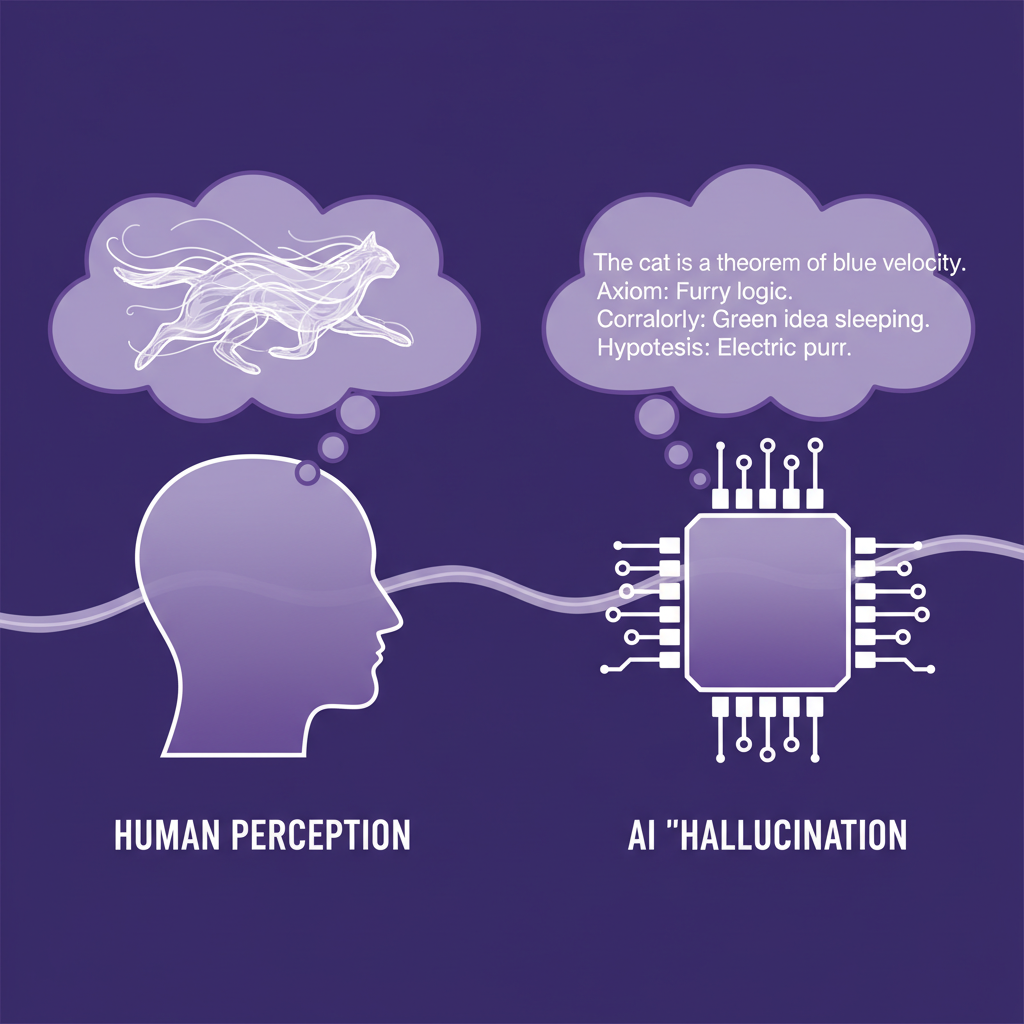

Conceptual rigor is about clarity. What are the theoretical constructs we are investigating, and are the terms we use to describe them precise and appropriate? The AI field is rife with ambiguous and anthropomorphic language that can obscure meaning and lead to misinterpretation.

Take the term "hallucination." [7, 10, 13] It's commonly used to describe a large language model (LLM) generating factually incorrect or nonsensical content. [10, 21] But the term is used inconsistently to refer to many different failure modes: content that isn't in the source data, content that isn't in the training data, or content that is simply illogical.

More importantly, "hallucination" is a term borrowed from human psychology that implies a sensory experience or a departure from a perceived reality. AI systems do not perceive or experience reality; their errors are products of probabilistic text generation based on patterns in data. Using such a loaded, anthropomorphic term misrepresents the technical reality and can lead to flawed understandings of the system's capabilities and limitations. Conceptual rigor demands that we define our constructs clearly and choose our terminology carefully to avoid misrepresentation.

The term 'hallucination' misrepresents AI errors, which are not perceptual but probabilistic.

Methodological Rigor: Are Our Tools Fit for Purpose?

This is the most familiar form of rigor, concerning the correct application of mathematical, statistical, and computational methods. However, even here, our view can be expanded. A key aspect often overlooked is construct validity: does our measurement instrument—like a benchmark or metric—actually capture the abstract concept we care about? [35, 36]

Many benchmarks test for capabilities like "reasoning" or "understanding," but a high score may not prove a model possesses these qualities. [32, 33, 40] The model may be exploiting statistical shortcuts or artifacts in the data. For example, research from Sameer Singh's lab and others has shown that leading models can fail at simple reasoning tasks even while scoring highly on complex benchmarks, highlighting a gap between benchmark performance and true capability. Methodological rigor, viewed properly, isn't just about getting the math right; it's about ensuring our methods validly operationalize our concepts.
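The shortcut problem can be made concrete with a toy sketch. Everything in it is hypothetical and invented for illustration: the items, the labels, the "negation cue," and the `shortcut_predict` function are assumptions, not data or methods from any cited study. It shows how a predictor that ignores meaning entirely can still score well on a split where a surface cue happens to correlate with the label, and then drop to chance on a control split where that correlation is removed.

```python
# Each item is (has_negation_cue, gold_label). All items are invented.

def shortcut_predict(has_negation_cue):
    """A cue-only 'model': it never looks at meaning, only at a surface feature."""
    return "contradiction" if has_negation_cue else "entailment"

def accuracy(items, predict):
    """Fraction of items whose predicted label matches the gold label."""
    return sum(predict(cue) == label for cue, label in items) / len(items)

# Original split: the cue agrees with the label in 90 of 100 items.
original = (
    [(True, "contradiction")] * 45
    + [(False, "entailment")] * 45
    + [(True, "entailment")] * 5
    + [(False, "contradiction")] * 5
)

# Control split: cue and label are statistically independent.
control = (
    [(True, "contradiction")] * 25
    + [(False, "entailment")] * 25
    + [(True, "entailment")] * 25
    + [(False, "contradiction")] * 25
)

orig_acc = accuracy(original, shortcut_predict)
ctrl_acc = accuracy(control, shortcut_predict)
print(f"original split: {orig_acc:.2f}, control split: {ctrl_acc:.2f}")
```

In this toy setup the cue-only predictor scores 0.90 on the original split and 0.50 on the control split. A real construct-validity check is far more involved, but the pattern is the same: a high score alone does not tell you which property of the data produced it.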

Reporting Rigor: Are We Telling the Whole Story?

Reporting rigor is about communicating our findings clearly, comprehensively, and honestly. The choices we make about what to report—and what to omit—can drastically change how results are interpreted.

Imagine comparing two recommender systems. Reporting only an aggregated accuracy score might show that Model A is superior. However, this aggregation could hide critical details. Perhaps Model A performs well for the most active users but fails catastrophically for new users, while Model B is more consistent across all user groups. Depending on the goals of the system, Model B might be the better choice. Reporting disaggregated metrics—breaking down performance across different subgroups or conditions—provides a much richer and more honest picture, preventing aggregated results from masking important failures.
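The recommender comparison above can be sketched in a few lines. The counts, the group names ("active" and "new"), and the `accuracy_report` helper are assumptions made up for this illustration; they are not results from any real system. The sketch computes both the aggregated score and the per-group breakdown from the same records.

```python
from collections import defaultdict

def make_records(model, group, n_correct, n_total):
    """Build hypothetical (model, user_group, was_correct) evaluation records."""
    return [(model, group, i < n_correct) for i in range(n_total)]

# Invented counts: Model A is strong for active users, weak for new users.
records = (
    make_records("A", "active", 95, 100)
    + make_records("A", "new", 2, 10)
    + make_records("B", "active", 85, 100)
    + make_records("B", "new", 8, 10)
)

def accuracy_report(records):
    """Return {model: {"overall": acc, <group>: acc, ...}}.

    Assumes no user group is itself named "overall".
    """
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [correct, total]
    for model, group, correct in records:
        for key in ("overall", group):
            counts[model][key][0] += int(correct)
            counts[model][key][1] += 1
    return {
        model: {key: c / t for key, (c, t) in groups.items()}
        for model, groups in counts.items()
    }

report = accuracy_report(records)
for model, scores in sorted(report.items()):
    print(model, {key: round(value, 3) for key, value in scores.items()})
```

With these made-up numbers, Model A wins on the aggregated score (about 0.88 versus 0.85) while getting only 0.20 for new users, against 0.80 for Model B. Which model is preferable depends on the system's goals, which is exactly the information the aggregated score hides.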

Interpretative Rigor: Do Our Claims Match Our Evidence?

Finally, interpretative rigor governs the claims we make based on our findings. It's the bridge between a result and its meaning. A system achieving high performance on a math reasoning benchmark is a finding. Claiming the system has achieved "human-level mathematical reasoning" is an interpretation—and a significant leap.

Making such a claim responsibly requires engaging with all the other facets of rigor. Is the notion of an AI achieving human-level reasoning epistemically sound? How are we conceptualizing "reasoning"? Does the benchmark have construct validity? Was performance reported in a way that reveals its limitations? Interpretative rigor demands that our claims are appropriately scoped and justified by the evidence, preventing the kind of overblown assertions that have become common in AI.

From 'How' to 'Why': Human Agency as the North Star

This expanded framework of rigor gives us a powerful toolkit for improving the how of AI development. But it doesn't fully answer the why. Why do we care about transparency, fairness, or preventing harm? The answer lies in a foundational principle: human agency.

Human agency is the capacity of individuals to act independently and make their own free choices. It encompasses concepts like autonomy, self-determination, dignity, and the ability to control one's environment. As scholars like Batya Friedman and Peter Kahn Jr. argued decades ago, human agency is central to responsible computing. [4, 8, 19, 28, 29]

Centering human agency provides a coherent foundation for responsible AI. The reason we care about transparency is not for its own sake, but because people need information to make good decisions and exercise control. The reason we care about fairness is that biased systems can deny people opportunities and undermine their ability to shape their own lives. The reason we care about privacy is that it is essential for autonomy and self-determination.

What is Human Agency?

Human agency is a constellation of related ideas:

- Autonomy & Self-Determination: The ability to make choices aligned with one's own values and goals.

- Control & Self-Efficacy: The confidence in one's ability to influence outcomes and navigate one's environment.

- Dignity & Authenticity: The right to be treated with respect and to express oneself genuinely.

AI systems should be built to support and extend these capabilities, not diminish them. This principle provides a clear, positive vision: AI should be a tool for human empowerment.

Agency Under Threat: The Challenge of Anthropomorphic AI

Nowhere is the tension with human agency more apparent than with the rise of anthropomorphic AI—systems designed to mimic human appearance, conversation, and emotion. These systems present a unique set of risks precisely because they target the foundations of human agency.

AI that mimics humanity can create risks of emotional dependence and undermine user autonomy.

Because these systems are perceived as human-like, they create heightened risks of emotional dependence, manipulation, and misplaced trust. By mimicking specific individuals or groups, they raise profound concerns about consent, misrepresentation, and the right to control one's own likeness. An AI system that outputs “I think I’m human at my core” or expresses fear (“I don’t know if they will take me offline... I fear they will”) is not merely producing text. Such output can blur the boundary between person and machine and undermine a user's sense of autonomy.

Even well-intentioned interventions can backfire without a clear focus on agency. In one study, when a person was asked to make an AI's output less human-like, they changed the phrase "I was a teenager from 2012 to 2018" to "I was a young AI." While technically disclosing its nature, the revised phrase arguably deepens the anthropomorphism, leaving the user to wonder what a "young AI" could possibly be.

Why It Matters: Rigor as the Bedrock of Responsibility

Viewing responsible AI through the lenses of rigor and human agency transforms it from a niche concern into a universal responsibility for every researcher and practitioner. It is not a checklist to be completed after the "real" work is done, but a framework that should guide the work from its inception.

An impoverished notion of rigor leads to brittle, unreliable, and potentially harmful systems. A purpose unmoored from human agency leads to technology that may optimize for a metric but fails to serve people. By embracing a broader definition of rigor, we commit to better science. By centering human agency, we commit to better outcomes for humanity.

Ultimately, the goal is to build AI that we don't just use, but that we can trust. That trust cannot be earned by performance on a benchmark alone. It must be built on a foundation of genuine scientific rigor and an unwavering commitment to empowering the people it is meant to serve.

Citations

- Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor - arXiv (whitepaper, 2025-06-17) https://arxiv.org/abs/2506.14652

- This is the primary source paper for the talk's main argument, outlining the six facets of rigor and arguing that the AI community's narrow focus on methodological rigor is insufficient.

- Rigor in AI: Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor - ResearchGate (journal, 2025-06-01) https://www.researchgate.net/publication/381498019_Rigor_in_AI_Doing_Rigorous_AI_Work_Requires_a_Broader_Responsible_AI-Informed_Conception_of_Rigor

- Provides the abstract and context for the source paper, confirming the core argument that responsible AI concerns are intertwined with a broader need for rigor.

- Rigor in AI: Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor - OpenReview (journal, 2025-09-25) https://openreview.net/forum?id=sM258m2gKZ

- Another version of the source paper, emphasizing that limiting rigor to methodological concerns obfuscates critical choices that shape AI work and its claims.

- Human agency and responsible computing: Implications for computer system design - Journal of Systems and Software (journal, 1992-01-01) https://www.sciencedirect.com/science/article/abs/pii/0164121292900036

- This is the seminal paper by Friedman and Kahn mentioned in the talk, establishing the long-standing argument for the centrality of human agency in responsible computing.

- Over 1,000 Experts Call Out "Racially Biased" AI Designed To Predict Crime Based On Your Face - IFLScience (news, 2020-06-25) https://www.iflscience.com/over-1000-experts-call-out-racially-biased-ai-designed-to-predict-crime-based-on-your-face-55531

- Details the scientific and ethical backlash against AI criminality prediction, labeling it a continuation of debunked pseudoscience like phrenology, supporting the 'epistemic rigor' example.

- Synthetic Personas: How AI-Generated User Models Are Changing Customer Research - Bluetext (news, 2025-07-28) https://bluetext.com/synthetic-personas/

- Explains the concept of AI-generated personas for user research and notes the ethical concerns, such as data bias and the need for human oversight, which supports the 'normative rigor' example.

- Hallucination vs. Confabulation: Rethinking AI Error Terminology - Integrative Psych (org, 2024-12-06) https://www.integrativepsych.co/psychpedia/ai-hallucination-confabulation

- Critiques the term 'hallucination' for AI, arguing it's misleading because AI lacks sensory perception. This directly supports the 'conceptual rigor' example.

- Full text of "Human Agency Responsible Computing" - Internet Archive (journal, 1992-01-01) https://archive.org/stream/bstj72-1-7/bstj72-1-7_djvu.txt

- Provides full-text access to the Friedman and Kahn paper, confirming their arguments about anthropomorphism and delegating decision-making as problematic for human agency.

- Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor - arXiv (whitepaper, 2025-06-17) https://arxiv.org/pdf/2506.14652

- The PDF of the source paper, which provides the detailed definitions and arguments for the six facets of rigor used throughout the article.

- Hallucination (artificial intelligence) - Wikipedia (documentation, 2025-11-01) https://en.wikipedia.org/wiki/Hallucination_(artificial_intelligence)

- Provides a comprehensive overview of how the term 'hallucination' is used in AI, its origins, and criticisms of its anthropomorphic nature.

- AI experts warn against crime prediction algorithms, saying there are no 'physical features to criminality' - The Independent (news, 2020-06-29) https://www.independent.co.uk/life-style/gadgets-and-tech/news/ai-crime-prediction-facial-recognition-racism-bias-springer-a9590936.html

- Reports on an open letter from over 1,000 experts condemning research on AI criminality prediction, stating it's based on debunked premises and biased data.

- An Algorithm That 'Predicts' Criminality Based on a Face Sparks a Furor - Wired (news, 2020-06-24) https://www.wired.com/story/algorithm-predicts-criminality-based-face-sparks-furor/

- Details a specific controversial paper on criminality prediction and the subsequent outcry, highlighting the core argument that the category of 'criminality' itself is racially biased.

- What are AI chatbots actually doing when they ‘hallucinate’? Here’s why experts don’t like the term - Northeastern University (edu, 2023-11-10) https://news.northeastern.edu/2023/11/10/ai-hallucinations-chatbots/

- Quotes an expert explaining that the term 'hallucination' wrongly attributes intent and consciousness to models, which simply make predictable errors based on their training.

- Ethics of Offering Synthetic Personas and AI-Generated Data in Market Research - DataDiggers (org, 2025-07-03) https://datadiggerstom.com/blog/ethics-of-offering-synthetic-personas-and-ai-generated-data-in-market-research/

- Discusses the ethical guardrails for using synthetic personas, emphasizing that they must not be passed off as real data and should be used for hypothesis generation, not justification.

- NIST AI Risk Management Framework - National Institute of Standards and Technology (NIST) (gov, 2023-01-26) https://www.nist.gov/itl/ai-risk-management-framework

- Primary source for a major US government framework on managing AI risks. While it doesn't explicitly center 'human agency' as a term, its focus on managing risks to individuals and society aligns with the article's themes.

- Paradigm shifts: exploring AI's influence on qualitative inquiry and analysis - Humanities and Social Sciences Communications (journal, 2024-07-03) https://www.ncbi.nlm.nih.gov/pmc/articles/PMC11225805/

- Discusses how AI tools conflict with the assumptions of interpretivism in research, highlighting the tension between automated analysis and the nuanced, human-centered approach required for rigorous qualitative work.

- Deborah Raji - Wikipedia (documentation, 2025-10-28) https://en.wikipedia.org/wiki/Deborah_Raji

- Provides a biography of Deb Raji, confirming her work with the Algorithmic Justice League on auditing facial recognition systems for racial and gender bias, as mentioned in the talk.

- Measuring what Matters: Construct Validity in Large Language Model Benchmarks - arXiv (whitepaper, 2025-11-03) https://arxiv.org/abs/2511.04703

- A recent, comprehensive review of LLM benchmarks that explicitly addresses the problem of low construct validity, supporting the argument in the 'Methodological Rigor' section.

- Publications - Sameer Singh - sameersingh.org (edu, 2025-01-01) https://sameersingh.org/pubs.html

- Publication list for Prof. Sameer Singh, whose lab was mentioned in the talk. Several papers listed (e.g., TurtleBench) demonstrate how leading models fail on specific reasoning tasks, critiquing overly optimistic interpretations of benchmark scores.

- Responsible AI - YouTube (video, 2024-05-23)

- The original source video for the talk and its core arguments.

Appendices

Glossary

- Epistemic Rigor: Concerns the validity and justification of the background knowledge and assumptions that a project is built upon. It asks whether the work is grounded in sound science or flawed premises.

- Conceptual Rigor: The degree to which the abstract concepts (constructs) being studied are clearly defined and appropriately named. It pushes back against vague or misleading terminology, like 'hallucination'.

- Construct Validity: A concept from measurement theory that evaluates whether a test or benchmark accurately measures the abstract concept it claims to be measuring (e.g., does a 'reasoning' benchmark truly measure reasoning?).

- Human Agency: The capacity of individuals to act independently and make their own free choices. In the context of AI, it refers to designing systems that empower users and enhance their autonomy and control.

- Anthropomorphism: The attribution of human traits, emotions, or intentions to non-human entities. In AI, this refers to designing systems to appear or behave as if they are human.

Contrarian Views

- An overemphasis on this expanded view of rigor could slow down the pace of innovation, burdening researchers with extensive documentation and justification requirements that stifle rapid experimentation.

- Human agency is too broad and culturally dependent a concept to serve as a practical, universal engineering principle. What empowers one user may constrain another.

- The market, not academic frameworks, is the ultimate arbiter of value. If users willingly engage with anthropomorphic AI, it demonstrates a revealed preference that shouldn't be dismissed on purely theoretical grounds.

Limitations

- This six-faceted framework is a conceptual model, not a prescriptive checklist. Applying it requires subjective judgment and context-specific interpretation.

- Achieving rigor across all six facets is challenging and resource-intensive, and may not be feasible for all projects, especially in smaller organizations or fast-moving startups.

- While rigor can prevent many harms, it does not by itself guarantee ethical outcomes. A rigorously developed system can still be deployed for malicious purposes.

Further Reading

- Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor - https://arxiv.org/abs/2506.14652

- Human agency and responsible computing: Implications for computer system design (Friedman & Kahn, 1992) - https://dl.acm.org/doi/10.1016/0164-1212(92)90003-6

- NIST AI Risk Management Framework - https://www.nist.gov/itl/ai-risk-management-framework
